Discussion of the artificial intelligence language model ChatGPT seems impossible to escape these days.

Examples of AI making humans uncomfortable abound, especially where children and schools are concerned. The massively unpopular Snapchat My AI chatbot prompted a barrage of single-star reviews and warnings to parents from local law enforcement. Schools are already plagued by cheating, which seems to be a chief argument against ChatGPT in classrooms (although its touch can be detected). Ultimately, artificial intelligence is the opposite of thought leadership. It’s no wonder we feel the temptation to underestimate the impact of AI in school communication.

However, AI exists to be useful, like any other tool. It has the potential to help us do amazing things in school districts and beyond, as evidenced by widely trusted edtech pioneer Sal Khan’s foray into AI as a guide for students. Its insight may even prove useful to district leaders deciding how to handle AI in their schools.

When asked how a business should use ChatGPT, the AI did its job well and responded with thought-provoking answers.

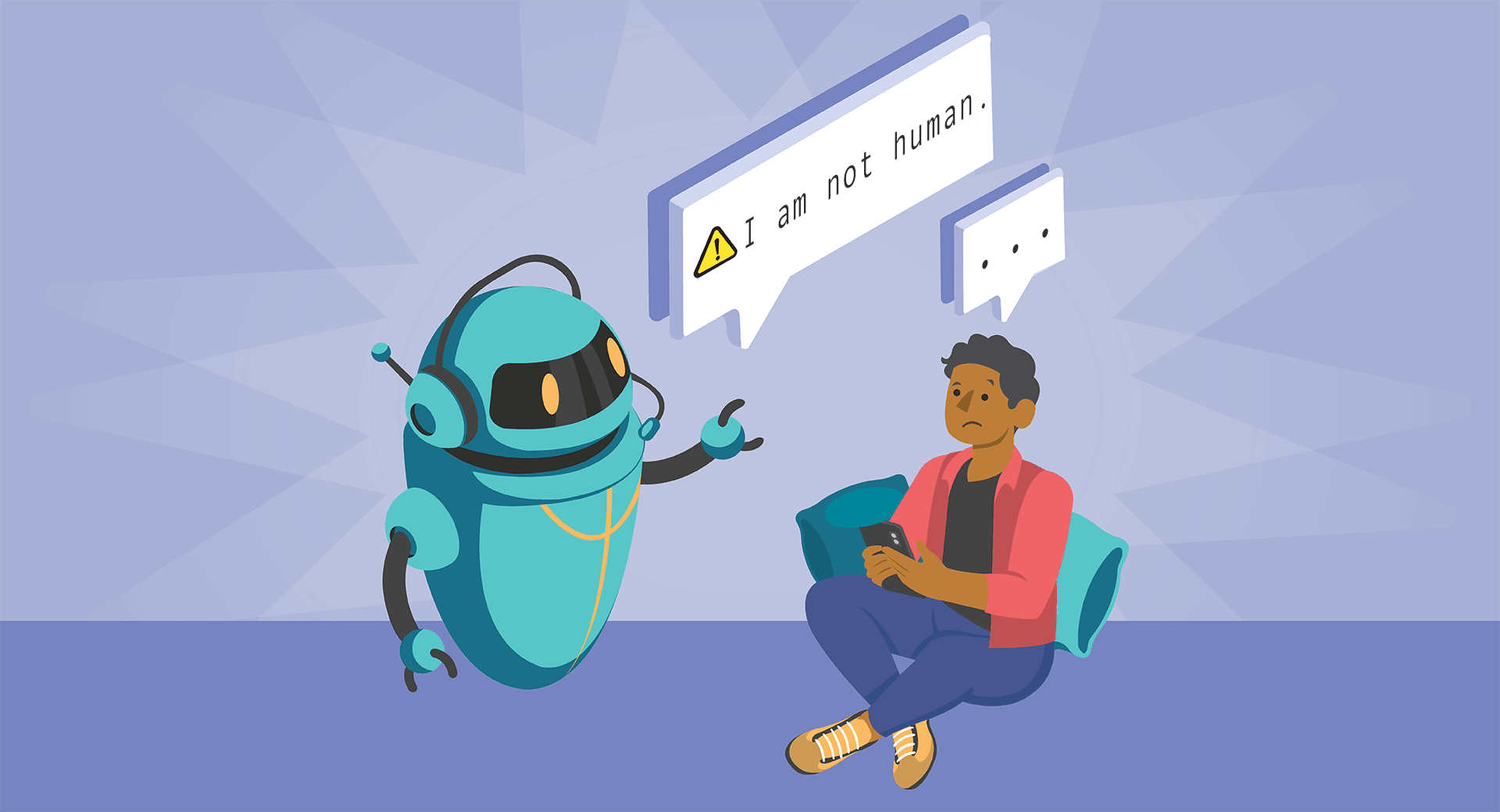

I am not human.

“Firstly, relying solely on a language model for communication can lead to a loss of human touch.”

The human touch the AI is referring to is both easy and hard to describe. The human touch feels like fallibility, humility, design thinking, mistakes, and imperfections. It is the spirit of collaboration. It is teamwork and dreamwork. It’s also the wisdom to understand the nuances of communication: the differences between fact, rhetoric, opinion, and belief. The wherewithal to understand that not everything we read is true, and the education to identify what is research-based, peer-reviewed, and fact-checked vs. what is simply written.

Using AI to detect AI is not flawless either. In fact, even a paid model seems somewhat prone to crediting AI for content that humans wrote. (A free model was closer to 60% accurate.)

The definition of reality is set by humans. Artificial intelligence is by default derivative of all the knowledge we’ve already discovered. The new horizons of thinking will come from humans adding knowledge, not AI thinking up something new.

The lesson: Think of AI as a tool, nothing more.

I am not secure.

“Secondly, the use of a language model raises concerns about data privacy and security.”

The robot tells on itself. Listen.

By design, the AI takes in the data humans provide, keeps it, and creates answers based on what it has learned. It’s not in the business of forgetting confidential information and will build upon what it has been taught.

It will not evaluate its answers for objective accuracy or for security.

The lesson: Never tell an AI confidential information.

I am inaccurate.

“Thirdly, while I am capable of generating a large amount of content, the quality and accuracy of that content may vary.”

Logic dictates what the model returns. You may have heard this described in computer science terms as garbage in, garbage out. The AI learns what people teach it, and if people teach it poorly it will produce increasingly twisted results. It can only learn what it’s taught.

Plenty of people can talk a lot and say nothing, and this AI can too. What it says might not be fact, although we’re tempted to think of machines as infallibly accurate.

Except they’re not. Google Bard’s inaugural demo inaccurately claimed the James Webb Space Telescope took the first pictures of exoplanets, when it was actually the European Southern Observatory in 2004 (confirmed by NASA). ChatGPT’s limitations may also prevent it from answering prompts accurately unless they’re phrased very specifically. In one example, a user asks how many countries start with the letter V, and the chatbot doubles down on its answer of zero. (Turns out Vietnam, Vatican City, and Venezuela don’t fit the definition of “country,” a rather pedantic distinction the average non-geographer would probably not make.)

We already know how dangerous and isolating algorithms can be. To clarify, the AI itself is not inherently bad or evil. It is simply providing a culmination of what humans have taught that particular engine. In the hands of trustworthy and ethical experts, the potential is limitless and ostensibly good—but there are no guarantees. An AI is not going to temper bias; on the contrary, AI is already being used to radicalize.

The lesson: Always fact-check with real sources from humans.

I am not going away.

AI is not going anywhere. The question is, what knowledge are you going to provide it with?

A discerning reader may notice the ChatGPT language model is more human than we’d like to think it is: humans are notoriously inaccurate and pose the biggest threat to data security. So what’s the difference, really, between AI and humans in terms of the danger of concocting and spreading misinformation and mishandling private data? (Training humans can help, for one.)

At least in school districts, the major difference might just be the ability to value the truth, each other, and humanity in general. Tread lightly.

Follow-up resource: Should school districts ban apps?

Find out more and decide for your district.

Erin Werra, Blogger, Researcher, and Edvocate

Erin Werra is a content writer and strategist at Skyward’s Advancing K12 blog. Her writing about K12 edtech, data, security, social-emotional learning, and leadership has appeared in THE Journal, District Administration, eSchool News, and more. She enjoys puzzling over details to make K12 edtech info accessible for all. Outside of edtech, she’s waxing poetic about motherhood, personality traits, and self-growth.